Is anyone else starting to get nervous about how fast technology is moving? Oh, everyone is? Okay, good. Maybe I just come from a simpler time when things like seeing Nathan Drake’s footsteps in the snow were a technical marvel beyond our wildest dreams. Now we’re casually being exposed to realistic images hallucinated by LLMs and game engines that can create worlds so indistinguishable from reality that it makes me reconsider the validity of the simulation hypothesis.

At last week’s Game Developers Conference in San Francisco, the future of video game technology was on full display, with AI being the focal point of the entire week. Everyone is looking for ways to push graphics further, create new experiences, democratize game development, and most importantly, save as much money as possible, all by leveraging the power of AI.

Like it or not, AI is going to be a huge part of how games are made moving forward, and an equally huge part of how we experience them. Nvidia – the company responsible for producing those graphics cards no one can get their hands on – is already leading the industry in the development of AI hardware and software, so at this year’s GDC I met with the company to get a better understanding of where AI is, and where it’s going.

How Nvidia’s AI Is Changing Game Development And Gaming Experiences

If you have a reasonable fear of AI, this first demo is going to make you pretty uncomfortable. Nvidia gave me a live demonstration of its ACE digital human technologies, which use generative AI to enhance NPCs, allow enemies to learn from your actions, and create emergent narrative experiences never before possible. The feature was demonstrated in inZOI, Krafton’s new Sims-like life simulator that allows you to handcraft dozens of NPC characters and let them loose in a simulated world.

Through Nvidia’s ACE, inZOI’s “smart Zois” are meant to have more realistic interactions with their environments. You can also give your NPC prompts that will inform how they behave and engage with other NPCs, reshaping both their personalities and the world around them.

To demonstrate this, the Nvidia rep gave one of her NPCs this prompt: “You are a character in a video game and you want everyone else to know about it.” I didn’t expect to watch an AI have an existential crisis first thing in the morning, but here we are. And, to be fair, it’s still a lot nicer than whatever the average gamer has in mind to do to their NPCs.

The character didn’t take on the prompt immediately, but after a bit of processing, you could see this piece of direction start to take root. A thought bubble over her head revealed that she was starting to think about the limitations of her physical environment, now that she knew it was all a simulation. When one of her friends came by to talk, the friend noticed that she was acting strange, perhaps a little nervous. I couldn’t stick around long enough to get to the part where the AI starts stabbing everyone in the face to prove that nothing is real, but I could see where things were going.

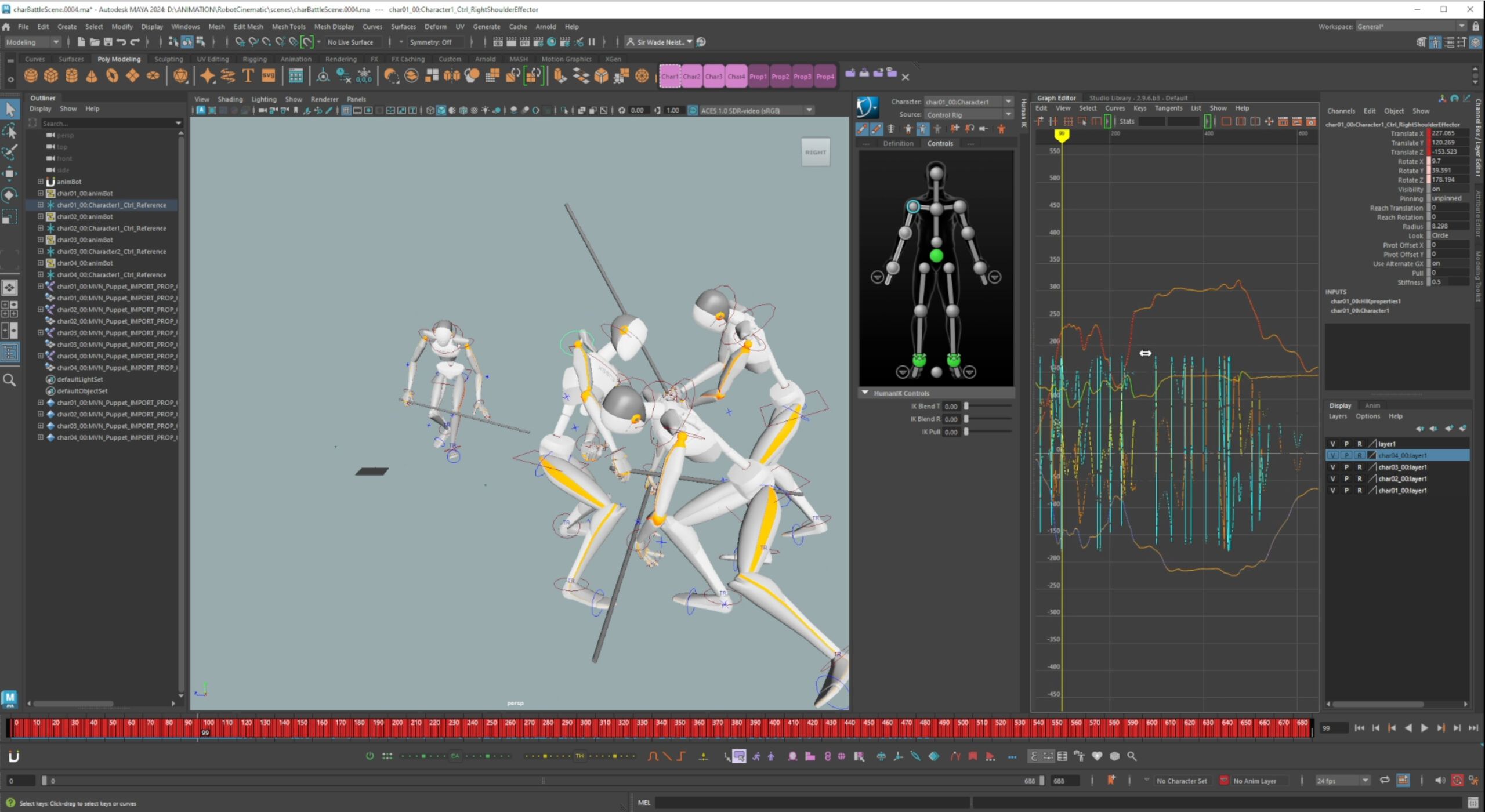

Making Character Animation Faster And Easier

On the developer side, Nvidia gave me a real-time demonstration of how its AI-powered Resolve plug-in can make animating characters faster and easier. With simple, plain-language prompts, developers can generate half a dozen options for how they’d like a character to move in a matter of seconds, then easily drop the one they choose into their shot.

In the demonstration, the animator placed a character model in a gray box and gave it the prompt “step forward and jump over the table”. Moments later, the AI generated five different versions of the character stepping forward and jumping over a table, which the animator was then able to narrow down to the best option, and then tweak until its starting position, speed, and jump height were exactly the way he wanted.

It’s a tool that could be extraordinarily beneficial in motion capture, allowing animators to connect shots and scenes together much faster, or repair errors that would otherwise require either getting an actor back on a motion capture stage, or investing an incredible amount of time to hand animate – both things that may not be possible in a typical game development pipeline. While the tool is designed to aid the animation process rather than replace it, one can’t help but wonder what the next evolution of this kind of technology will look like.

Embracing And Fearing AI

As exciting and terrifying as generative AI is right now, the fact is that AI has been part of game development for a long time, and it’s a reason why some of our favorite games were even possible to make.

During the demo, Nvidia also showed off DLSS 4. The latest version of the widely used upscaling feature is available on 50-series RTX cards in more than 100 games, using techniques like multi-frame generation and ray reconstruction, backed by an AI supercomputer in the cloud, to make your games look better than they ever have before.

I’m a huge advocate for DLSS because it can extend the life of your hardware far beyond its natural capabilities. I think it’s going to be the Switch 2’s secret weapon, and it’s the kind of AI tech I’m very excited to see evolve.

There’s a dark side to AI, too. The kind that steals the hard work of humans, puts developers out of work, and makes the unconscionably rich unconscionably richer. What responsibility does Nvidia have to mitigate harm while driving full force toward future technology? How do we know whether these advancements are moving us closer to self-actualization or oblivion? These are the things we’ll have to weigh as Nvidia leads us into an AI-driven future. It’s good to be wary, but it’s also okay to be amazed.

- Date Founded: April 1, 1993
- CEO: Jensen Huang
- Subsidiaries: Mellanox Technologies, Cumulus Networks, NVIDIA Advanced Rendering Center
- Headquarters: Santa Clara, California, United States
