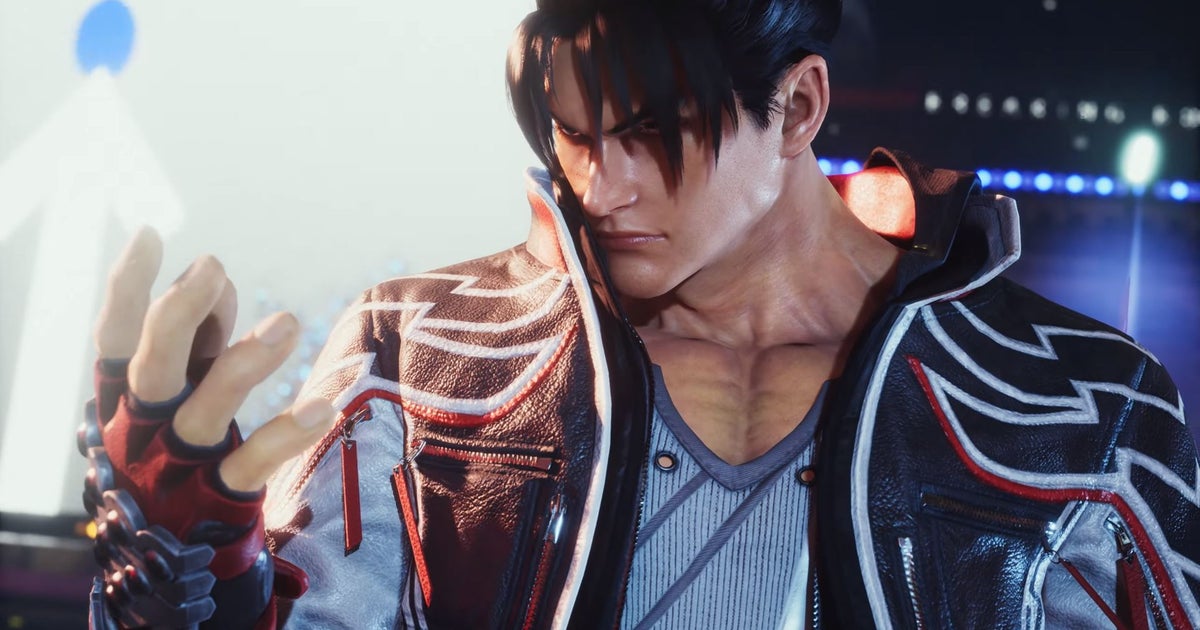

This week’s DF Direct Weekly – perhaps inevitably – kicks off with extended discussion on the Palworld phenomenon, while much time is spent mulling over the Horizon Forbidden West PC features trailer Sony ‘dropped’ last week. However, what caught my eye in putting this piece together was our discussion on input lag in the wake of John’s Tekken 8 coverage, where it emerged that Namco had delivered the most responsive Tekken game in the modern era. We don’t focus too much on input lag, but really we should, especially as we enter the next era of graphics technology.

Put simply, input lag is usually defined as the time between the player’s input on their device of choice and that input playing out on-screen. Typically, the longer the delay, the more noticeable it is and the less enjoyable a game can be to play. The basic methodology for measuring it has not changed since 2009, when ex-Neversoft (now UFO debunker) Mick West posted an article where he measured input lag by using a 60fps camera pointed at both joypad and screen. By moving frame by frame, you can see the precise frame where input is made and then you simply count the frames until the result is visible on-screen.
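To make the arithmetic behind frame counting concrete, here is a minimal sketch in Python. The function name and parameters are illustrative assumptions, not part of Mick West’s article; the only thing taken from the method is that you count camera frames between the press and the on-screen result, and that each frame of a 60fps camera covers roughly 16.7ms.

```python
from __future__ import annotations


def frames_to_latency_ms(
    press_frame: int, response_frame: int, camera_fps: float = 60.0
) -> tuple[float, float]:
    """Convert a frame count from camera footage into milliseconds.

    Returns (latency_ms, resolution_ms). resolution_ms is the length of one
    camera frame, which is the finest step this method can resolve.
    """
    if camera_fps <= 0:
        raise ValueError("camera_fps must be positive")
    if response_frame < press_frame:
        raise ValueError("response cannot come before the press")
    frame_ms = 1000.0 / camera_fps
    return (response_frame - press_frame) * frame_ms, frame_ms


# Hypothetical reading: press seen on frame 100, result on frame 105.
latency, resolution = frames_to_latency_ms(100, 105)
print(f"{latency:.1f}ms, resolved in steps of {resolution:.1f}ms")
```

The second return value is a reminder of why a faster camera helps: with a 60fps camera, any single reading can only land on multiples of about 16.7ms.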

Methodology was tightened up with the creation of the controller monitor board, which tied button presses to LEDs on a board you positioned next to your screen, again using a high-speed camera to measure the difference between device input and game response. Fast forward to 2017 and remarkable work by Nigel Woodall pushed the science to the next level. By converting HDMI to component video and then disabling two of the three components whenever the button was pressed (producing a small border on-screen for the duration of the press), the high-speed camera was no longer required – you could use video capture instead to get input lag measurements that removed much of the prior ambiguity in camera-based results. Frame counting? That’s still required, though Nigel has made some efforts on automation here.
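As a rough illustration of how frame counting on captured video might be automated, the sketch below assumes you have already reduced each captured frame to two numbers: a level for the border region that appears during the button press, and a level for the screen region where the game’s response shows up. This is not Nigel Woodall’s tooling; the function names, the 0 to 1 scale and the threshold are all hypothetical.

```python
from __future__ import annotations

from typing import Optional, Sequence


def first_crossing(
    levels: Sequence[float], threshold: float, start: int = 0
) -> Optional[int]:
    """Index of the first frame at or after `start` whose level reaches the threshold."""
    for i in range(start, len(levels)):
        if levels[i] >= threshold:
            return i
    return None


def count_lag_frames(
    border_levels: Sequence[float],
    response_levels: Sequence[float],
    threshold: float = 0.5,
) -> Optional[int]:
    """Captured frames between the press marker appearing and the game responding.

    Returns None if either event is missing from the clip.
    """
    press = first_crossing(border_levels, threshold)
    if press is None:
        return None
    response = first_crossing(response_levels, threshold, start=press)
    if response is None:
        return None
    return response - press


# Hypothetical clip: border appears on frame 2, game reacts on frame 5.
border = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0]
response = [0.0, 0.0, 0.0, 0.0, 0.0, 1.0]
print(count_lag_frames(border, response))
```

In practice the hard part is choosing the response region and a threshold that work for a given game, which is one reason manual frame counting has not gone away.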

- 0:00:00 Introduction

- 0:00:54 News 01: What’s up with Palworld?

- 0:25:45 News 02: Capcom adds Enigma DRM to older games

- 0:35:31 News 03: AMD releases Fluid Motion Frames tech

- 0:52:30 News 04: Horizon Forbidden West PC features detailed

- 1:05:22 News 05: Tekken 8: a superb fighter

- 1:15:53 News 06: Input lag deep dive

- 1:32:52 Supporter Q1: What would you recommend spec wise for an upper-midrange PC?

- 1:37:08 Supporter Q2: Should id Software commercialize their id Tech engine?

- 1:40:12 Supporter Q3: Is the time of 1080p monitors over?

- 1:43:55 Supporter Q4: Could Nintendo, Microsoft, or Sony develop their own Proton-like translation layers to run games from other platforms?

- 1:46:55 Supporter Q5: With John’s new Direct background, isn’t he worried about burn-in?

Ultimately though, what we’re seeing is improved methodology to get a tighter lock on an important part of the gameplay experience – especially for competitive titles. There are big implications for PC graphics card reviews as well. Nvidia and AMD are working hard on technologies like Reflex and Anti-Lag to reduce input lag. Typically input lag falls as frame-rates go higher – but we’re now looking at scenarios where the more performant graphics card could possibly deliver slower-to-respond gameplay. Methodology here is somewhat simpler thanks to PC’s open approach: tools are available that can measure what Nvidia calls ‘PC latency’ as part of the benchmarking procedure.

The importance of input lag comes into even sharper focus as we move into the era of frame generation as the means by which to improve frame-rates, freeing up the GPU for next-level rendering – like path-tracing, for example. Current forms of frame-gen keep a frame in hand and have a processing cost of their own, two things that impact response. It’s widely accepted that the end-game of frame-gen is that interpolated frames will match the refresh rate of your display. However, right now at least, it’s a trade between game fluidity and response, so keeping input lag in check is vitally important – as is measuring it as part of a critique on game performance.
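The fluidity-versus-response trade can be sketched with a deliberately simplified model. It assumes that interpolation-based frame generation holds back exactly one rendered frame and adds a fixed processing cost, and that output frame-rate is double the rendered frame-rate. The function name, the 3ms cost and the 50ms starting latency are hypothetical values chosen for illustration, not measurements of any real implementation.

```python
from __future__ import annotations


def estimate_framegen(
    rendered_fps: float, base_latency_ms: float, gen_cost_ms: float = 3.0
) -> tuple[float, float]:
    """Return (output_fps, estimated_latency_ms) under a simplified model.

    Assumptions (not vendor figures):
    - one rendered frame is kept in hand so there is something to interpolate towards
    - generating the in-between frame costs a fixed gen_cost_ms
    - one generated frame is inserted per rendered frame
    """
    if rendered_fps <= 0:
        raise ValueError("rendered_fps must be positive")
    held_frame_ms = 1000.0 / rendered_fps
    output_fps = rendered_fps * 2.0
    return output_fps, base_latency_ms + held_frame_ms + gen_cost_ms


for fps in (30.0, 60.0, 120.0):
    out_fps, latency = estimate_framegen(fps, base_latency_ms=50.0)
    print(f"{fps:.0f}fps rendered -> {out_fps:.0f}fps shown, ~{latency:.1f}ms")
```

Even in this toy model, the latency penalty shrinks as the rendered frame-rate rises, because the frame held in hand represents less time.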

Nvidia’s ‘PC latency’ is useful as its prior methodology – LDAT – was in need of revision. In theory, LDAT should answer the question of how to measure input lag once and for all, by using a sensor attached to the display that measures a sudden change in luminance after a mouse button press, like the muzzle flash of a gun in an FPS, for example. I used this to measure display latency improvements in Steam Deck OLED but it has one big limitation – if you’re not playing an FPS, finding an area of the screen to measure is immensely tricky. For that reason, we only use LDAT sparingly – and indeed, for a current piece we’re working on concerning cloud lag on the new PlayStation streaming service, we’ve reverted to the OG Mick West approach.


One way or another, internal metrics like ‘PC latency’ will solve this issue for PC games, but the path to getting good numbers on consoles remains tricky. It’s likely the case that frame-counting will always be a part of the process, which is onerous, but is at least easier than pixel-counting (though it does require a somewhat convoluted hardware system). However, as we discuss in this week’s Direct, there is the sense that the controller itself desensitises general gameplay, opening up a wider window of what feels like ‘acceptable’ response to many people.

If we go back to Tom Morgan’s 2017 work based on Nigel Woodall’s component interrupt method, we can see that there’s a gulf in response between games running at 30fps and those running at 60fps. So far, so logical. However, his testing primarily looked at 60fps games and it’s striking to see a substantial divide in response time between games operating at the same frame-rate – a remarkable 47.5ms delta between Call of Duty: Modern Warfare (39.3ms) and Doom 2016 (86.8ms). And yet nobody had too many complaints at the time about Doom’s response.
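To put that gap in perspective, the short Python sketch below converts the two quoted figures into 60fps frame times. The variable names are illustrative; the only inputs are the 39.3ms and 86.8ms values quoted above.

```python
# Figures quoted above, both from games targeting 60fps.
FASTER_MS = 39.3
SLOWER_MS = 86.8
FRAME_MS_AT_60FPS = 1000.0 / 60.0  # one 60fps frame lasts about 16.7ms

delta_ms = SLOWER_MS - FASTER_MS
delta_frames = delta_ms / FRAME_MS_AT_60FPS

print(f"delta: {delta_ms:.1f}ms, or about {delta_frames:.2f} frames at 60fps")
```

In other words, two games running at the same frame-rate can sit nearly three full frames apart in response.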

This is just my personal theory, but I believe that the nature of the joypad itself dulls perception of fast response for many people and at the same time, even if Doom is laggier, if it’s consistently operating at the same level of response, people adjust to it quite quickly. It takes a game with truly poor response, or highly variable response – Killzone 2 on PS3, for example – for people to really start to cotton on that there’s something not quite right. The controller introduces other issues of course, which may feel like input lag problems but are something quite different – the dead zone areas on analogue sticks for example.

Generally speaking, a faster-to-respond game feels better to play, but for console titles at least, our current thinking is that it’s a case of doing the work when it ‘feels’ like something’s wrong – there’s a wide spread of seemingly acceptable input lag values and the response rates we get are often determined by aspects such as more complex game engines or double vs triple v-sync buffering. In the PC space though, it’s one of my missions for 2024 to more heavily integrate latency analysis into general testing – especially on graphics cards and as frame generation gains more traction.

In the meantime, enjoy Tekken 8 and if you are playing it, I’m curious if you notice the difference delivered by a crisper response – it’s almost 20ms quicker to respond than Tekken 7! I’d also recommend following Nigel Woodall and checking out his input lag database, which throws up a range of interesting results.

I hope you enjoy this week’s DF Direct, which also includes some testing on AMD’s Fluid Motion Frames driver, which brings frame generation to every DX11 or DX12 game out there (with certain caveats!). Putting together the show is one of my highlights of the week, and if you do want to get involved, please do check out the DF Supporter Program for your chance to help shape the show and get early access every weekend.
